What are embeddings?

If you have worked with AI tech before (or read about it), you might have come across the term “embeddings”. For non-technical people, “embeddings” can sound like a very abstract and complex concept. But it’s actually a simple idea if you understand the fundamentals. In this post, I’ll explain in basic terms what embeddings are and how they can be used.

What is text embedding?

Imagine you’re trying to teach a computer what words mean. But instead of using a dictionary, you give each word a unique set of numbers that capture its meaning. These sets of numbers are called “embeddings”.

Okay, but why numbers?

Computers don’t understand words like we do - they understand numbers. So we translate words, sentences, or even whole paragraphs into numbers, in a smart way, so that:

- Similar meanings = similar numbers

- Like: “happy” and “joyful” get numbers that are close to each other.

- Different meanings = very different numbers

- Like: “cat” and “airplane” get very different numbers.

How Do Embeddings Work?

Embeddings are typically generated using advanced deep learning models that process vast amounts of text. These models learn the underlying patterns and relationships between words, effectively mapping them into a high-dimensional vector space. Although the mathematics behind these techniques is complex, the essential idea is straightforward: words with similar meanings are placed close together in this space, making it easier for machines to interpret language contextually.

An Example

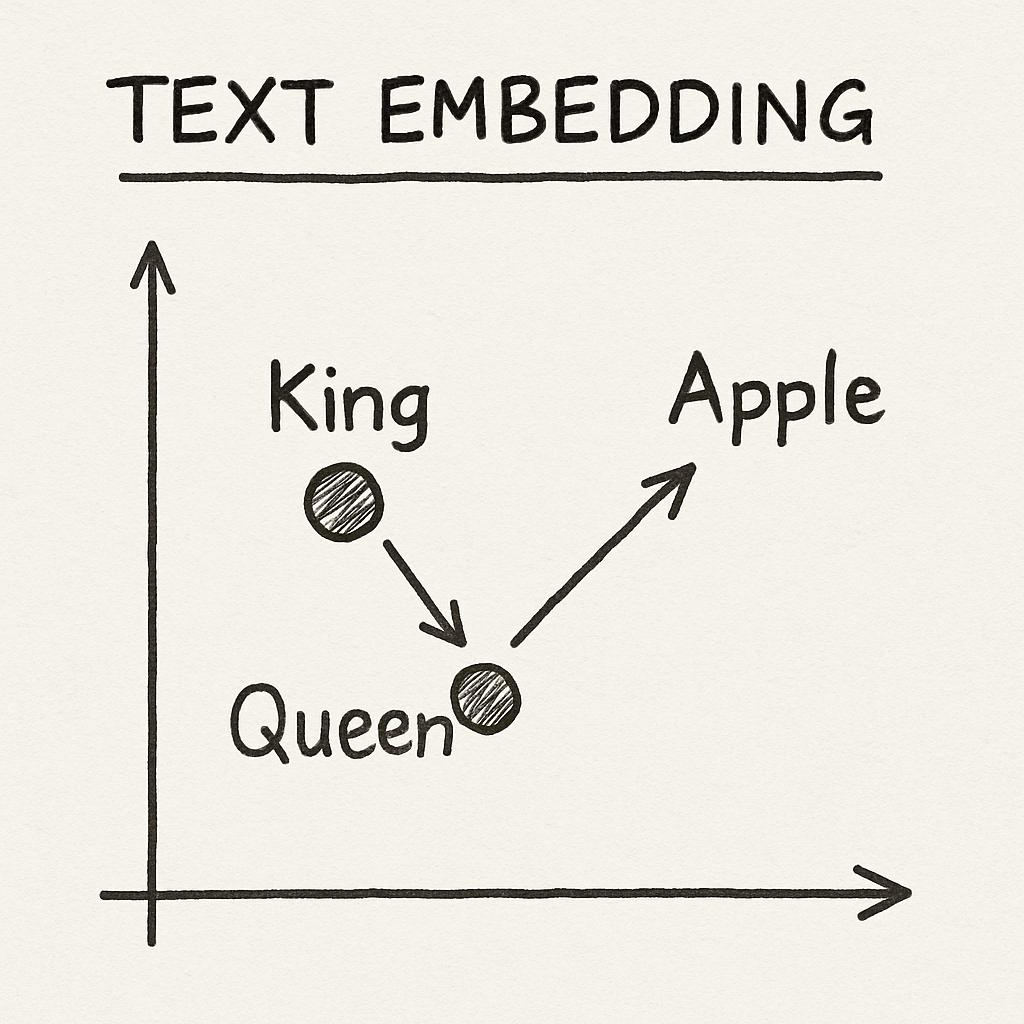

Let’s say we want to embed these words:

- “King”

- “Queen”

- “Apple”

After embedding, the computer might represent them like this:

- King → [0.9, 0.2, 0.5]

- Queen → [0.88, 0.19, 0.52]

- Apple → [0.1, 0.7, 0.3]

You can see “King” and “Queen” have very similar numbers, because they are related. But “Apple” is quite different.

This is an oversimplification, but it gives you an idea of how embeddings work.

What do we use this for?

Text embeddings are used in a variety of ways:

- Smart Search: They power search engines that understand the intent behind your queries, which improves the relevancy of the search results.

- Content Recommendations: Since it understands the intent of words better, it helps in grouping similar topics, which can improve personalized recommendations.

- Chatbots & Virtual Assistants: They enable more natural, context-aware conversations by understanding the meaning behind words.

- Data Organization: They assist in clustering and classifying large volumes of text data so that it can be more easily analyzed.

Conclusion

With the rise of AI, embeddings have become an essential tool for many applications, making it easier for machines to understand and process natural language contextually.

I hope this explanation of embeddings has shed light on how converting words into numerical representations can be used for smarter search, recommendations, and more. If you have any questions, feel free to reach out on X or LinkedIn.